Great news, the Parallella is now a real computer!! The gigabit ethernet port is working and the full Ubuntu desktop version is up and running! We had some scary moments this week, but in the end everything worked out. Sometimes it’s really worth considering the old advice “if it ain’t broke, don’t fix it”. Amazing how the most innocent of design changes can cause such major headaches at times…

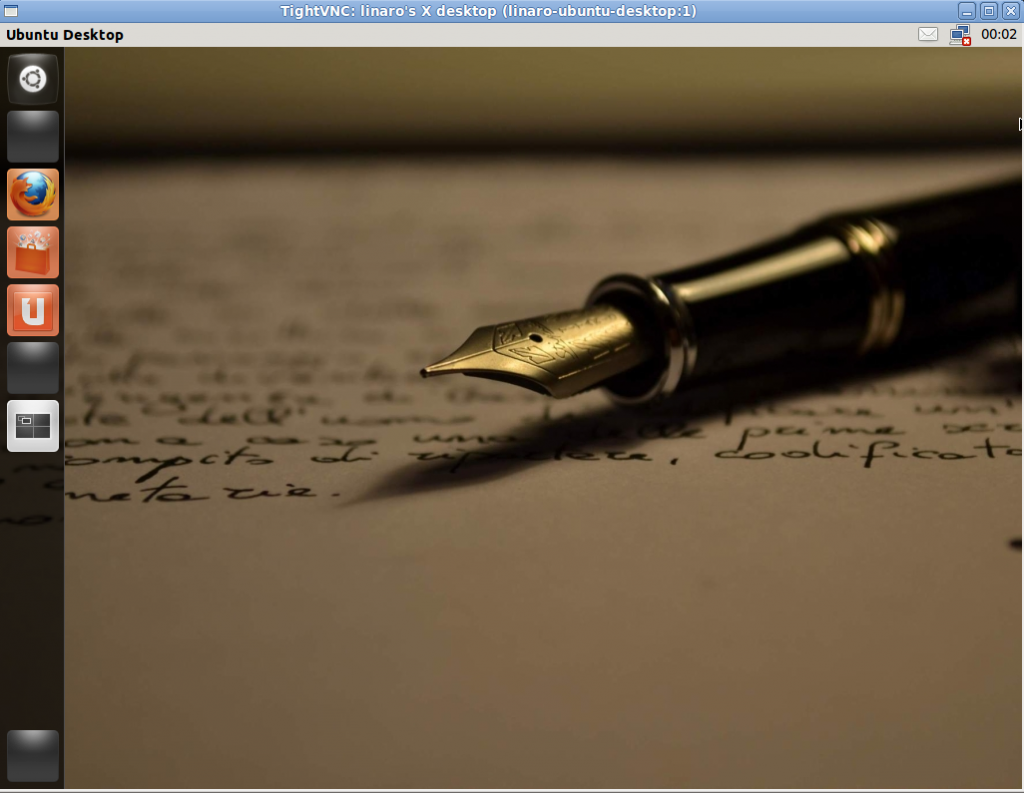

The picture above shows the Ubuntu desktop as seen within the TightVNC client running on a PC. At this point, the Parallella can be used as a “headless” low-power server. The next critical step will be to ramp up HDMI and USB functionality so that the Parallella can be used as a standalone computer.

The next two weeks will be filled with additional board bring-up work and extensive Parallella validation and testing. We need to make sure any and all major issues are wrung out before we send out the first 100 prototypes to the Kickstarter backers who signed up for Parallella early access. At this point we are aiming to ship these early boards by the second week of June.

We will be posting some SIGNIFICANT Parallella-related news over the next few weeks, so don’t go far! The best way to stay up to date on Parallella news is to follow us on Twitter (@adapteva and @parallellaboard).