[UPDATE 3/8/2016: We were not accepted into GSoC, but the FOSSi Foundation has graciously agreed to take us under its wing. We will be accepting applications for the RISC-V and SDR projects.]

We are applying to Google Summer of Code 2016 as a mentoring organization. Below you will find a list of initial project ideas. If you are interested in becoming a project mentor, or if you are an interested student, please don’t hesitate to reach out. These are just ideas; once accepted as an organization, we are open to any and all suggestions.

Learn more about Google Summer of Code

SCHEDULE

- 19 February: Mentoring organization application deadline.

- 29 February: List of accepted mentoring organizations published on the Google Summer of Code 2016 site.

- 25 March: Student application deadline.

- 22 April: Accepted student proposals announced on the Google Summer of Code 2016 site.

- 23 May: Students begin coding for their Google Summer of Code projects; Google begins issuing initial student payments provided tax forms are on file and students are in good standing.

- 20 June: Mentors and students can begin submitting mid-term evaluations.

- 27 June: Mid-term evaluations deadline; Google begins issuing mid-term student payments provided a passing student survey is on file.

- 15-23 August: Final week: Students tidy code, write tests, improve documentation and submit their code sample. Students also submit their final mentor evaluation.

- 30 August: Final results of Google Summer of Code 2016 announced.

PROJECT IDEAS (through FOSSi)

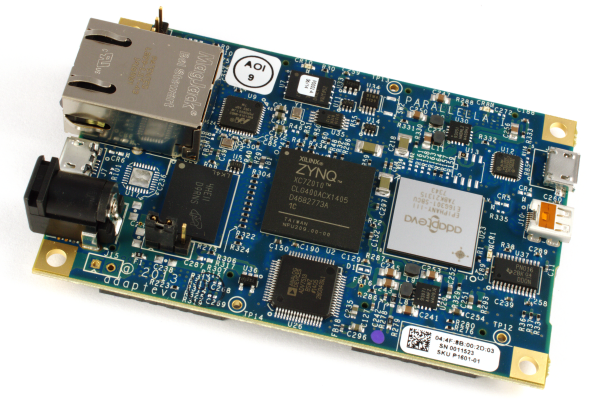

Port RISC-V core to Parallella

Summary: RISC-V is an exciting new open source processor architecture developed at UC Berkeley. A test environment has been created for the Zynq-based Zedboard development platform. Given that there are over 10,000 Parallellas in the field in 75 countries and 200 universities, it would be a great service to computer architecture researchers if there were a ready-to-run reference implementation of the RISC-V Rocket core running in the Zynq FPGA on the open source Parallella board.

Link: https://github.com/ucb-bar/fpga-zynq

Language: Verilog, C, Chisel

Mentor: “Andreas Olofsson” andreas@adapteva.com

Create a Parallella acceleration framework for GNU radio

Summary: GNU Radio is an immensely powerful framework for software defined radio, but it’s only as good as its built-in hardware support. Currently, there is support for SIMD, GPUs, and FPGAs, but there is no support for manycore array accelerators like Epiphany. The project involves creating the equivalent of the following accelerator (done in an FPGA) for the Epiphany manycore accelerator. All code should be written in “high level” C to maximize portability between manycore architectures. Reference: https://gnuradio.org/redmine/projects/gnuradio/wiki/Zynq/35

Language: Python/C

License: GPL/MIT

Mentor: “Andreas Olofsson” andreas@adapteva.com

NOTE: The project ideas below have been put on hold.

Extend PAL library with more advanced vision functions

Summary: The Parallel Architectures Library (PAL) is a compact C library with optimized routines for math, synchronization, and inter-processor communication. Any sane and informed person knows that the future of computing is massively parallel. Unfortunately, the energy needed to escape the current “von Neumann potential well” seems to be approaching infinity. The legacy programming stack is so effective and so easy to use that developers and companies simply cannot afford to choose the better (parallel) solution. To make parallel computing ubiquitous, our only choice is to rewrite the whole software stack from scratch, including algorithms, run-times, libraries, and applications. The goal of the Parallel Architectures Library project is to establish the lowest layer of this brave new programming stack. We have started this effort, and the library currently contains a fair number of simple functions, but to make it really useful another level of complexity is needed to more closely match OpenCV (generally considered the standard in the field). The goal of this project is to implement some of the most useful higher-level image processing functions. The kernels should be implemented as single-threaded, architecture-agnostic code.

Link: https://github.com/parallella/pal

Language: C

License: Apache

Mentor: “Ola Jeppsson” ola@adapteva.com
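To make “single threaded and architecture agnostic” concrete, here is a minimal sketch of what one such kernel might look like: a 3x3 grayscale dilation (max filter) in plain C. The function name and signature are hypothetical and are not taken from the PAL API; skipping the one-pixel border is also just an assumption made to keep the example short.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical kernel, NOT part of PAL: 3x3 grayscale dilation (max filter).
 *   x    input image, rows*cols floats, row-major
 *   r    output image, (rows-2)*(cols-2) floats, row-major (border skipped)
 * Plain C only: no threads, no intrinsics, no architecture-specific calls. */
void sketch_dilate3x3_f32(const float *x, float *r, int rows, int cols)
{
    assert(x != NULL && r != NULL);

    for (int i = 0; i + 2 < rows; i++) {
        for (int j = 0; j + 2 < cols; j++) {
            /* Start with the top-left pixel of the 3x3 window. */
            float m = x[(size_t)i * cols + j];

            for (int di = 0; di < 3; di++) {
                for (int dj = 0; dj < 3; dj++) {
                    float v = x[(size_t)(i + di) * cols + (j + dj)];
                    if (v > m)
                        m = v;
                }
            }
            r[(size_t)i * (cols - 2) + j] = m;
        }
    }
}
```

Because the kernel only touches the buffers it is handed, a caller can run it on a whole image or on one tile per core without changing the code.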

Implement software caching on Epiphany

Summary: Hardware caching wastes significant energy, is non-deterministic, and scales poorly to thousands of cores. Epiphany represents a class of architecture that includes closely coupled memory instead of automatic hardware caching to save power and area. The challenge with these types of processors is that the developer (or a program) must manage program and data memory. Epiphany and other similar architectures would benefit greatly from having an open source reference implementation for doing effective “per function” software caching. An initial prototype has been created here:

Link: https://github.com/adapteva/epiphany-sdk/wiki/Software-caching

Language: C/assembly

License: GPL

Mentor: “Ola Jeppsson” ola@adapteva.com
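As a rough illustration of the bookkeeping behind “per function” software caching, the sketch below models a tiny table of local-memory slots with least-recently-used eviction. It is not the design of the linked prototype: the slot count, the names, and the LRU policy are all assumptions, and the actual copy of function code into local memory is left as a comment.

```c
#include <assert.h>
#include <stddef.h>

#define SWC_SLOTS 2        /* assumption: local memory holds two functions */
#define SWC_EMPTY (-1)

/* Hypothetical bookkeeping for a per-function software cache. */
struct swcache {
    int      func_id[SWC_SLOTS];   /* function currently held in each slot */
    unsigned last_use[SWC_SLOTS];  /* timestamp of last use, for LRU */
    unsigned clock;                /* incremented on every acquire */
    unsigned misses;               /* number of simulated loads */
};

void swcache_init(struct swcache *c)
{
    assert(c != NULL);
    for (int i = 0; i < SWC_SLOTS; i++) {
        c->func_id[i] = SWC_EMPTY;
        c->last_use[i] = 0;
    }
    c->clock = 0;
    c->misses = 0;
}

/* Return the local slot that holds func_id, loading it first on a miss. */
int swcache_acquire(struct swcache *c, int func_id)
{
    assert(c != NULL && func_id >= 0);

    int victim = 0;
    c->clock++;

    for (int i = 0; i < SWC_SLOTS; i++) {
        if (c->func_id[i] == func_id) {       /* hit: already local */
            c->last_use[i] = c->clock;
            return i;
        }
        if (c->last_use[i] < c->last_use[victim])
            victim = i;                       /* remember the oldest slot */
    }

    /* Miss: a real implementation would copy the function's code from
     * external memory into this slot here (for example with DMA). */
    c->func_id[victim] = func_id;
    c->last_use[victim] = c->clock;
    c->misses++;
    return victim;
}
```

A call stub would then look up the slot with `swcache_acquire` and jump to the code stored there; how that jump is made is architecture specific and outside this sketch.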

Create a BLAS code generator for manycore processors

Summary: The BLAS library is a cornerstone of many scientific computing applications. Most hardware vendors ship their own proprietary, highly tuned implementations. Currently there is no known open source implementation for manycore RISC type architectures (like Epiphany). Optimized “hard coded” open source routines have been written for the Epiphany and there is even an MPI implementation, but there is no code base currently available that scales with a large number of cores (like 1,000). The generator should be built on top of the PAL library and take N (number of points) and M (number of cores) as arguments to generate code that can be scheduled on a very large 2D array of cores.

Link: https://github.com/parallella/pal

Language: C

License: Apache 2.0

Mentor: “Andreas Olofsson” andreas@adapteva.com
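One small building block such a generator would likely need is a way to split the problem over a 2D array of cores. The sketch below is only an illustration under stated assumptions: it treats N as the dimension of a square output matrix and assigns each core a contiguous tile. The type and function names are hypothetical and nothing here comes from PAL.

```c
#include <assert.h>

/* Hypothetical helper types: a half-open index range and a 2D tile. */
struct span { int start; int count; };
struct tile { struct span rows; struct span cols; };

/* Split n items over `parts` workers as evenly as possible.
 * The first (n % parts) workers receive one extra item. */
struct span block_span(int n, int parts, int idx)
{
    assert(n >= 0 && parts > 0 && idx >= 0 && idx < parts);

    int base  = n / parts;
    int extra = n % parts;
    struct span s;

    s.start = idx * base + (idx < extra ? idx : extra);
    s.count = base + (idx < extra ? 1 : 0);
    return s;
}

/* Tile of an n x n output matrix owned by core (r, c) in a
 * grid_rows x grid_cols array of cores (M = grid_rows * grid_cols). */
struct tile core_tile(int n, int grid_rows, int grid_cols, int r, int c)
{
    struct tile t;

    t.rows = block_span(n, grid_rows, r);
    t.cols = block_span(n, grid_cols, c);
    return t;
}
```

A generator could evaluate `core_tile` at generation time and emit fixed loop bounds for each core, so that no partitioning arithmetic remains in the generated code.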

Create an FFT code generator for manycore processors

Summary: The FFTW library (and equivalent libraries from hardware vendors) is a cornerstone of most signal processing applications. Currently there is no known open source implementation for manycore RISC type architectures (like Epiphany). Optimized “hard coded” open source routines have been written for the Epiphany and there is even an MPI implementation, but there is no code base currently available that scales with a large number of cores (like 1,000). The generator should be built on top of the PAL library and take N (number of points) and M (number of cores) as arguments to generate code that can be scheduled on a very large 2D array of cores.

Link: https://github.com/parallella/pal

Language: C

License: Apache 2.0

Mentor: “Andreas Olofsson” andreas@adapteva.com
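To show how N and M might shape the generated code, here is a minimal planning sketch. It assumes a radix-2 FFT with N and M both powers of two and a contiguous block of N/M points per core (the textbook binary-exchange layout); the project description does not prescribe any of this. Under those assumptions, log2(N/M) butterfly stages touch only core-local data and the remaining log2(M) stages need data from another core. All names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical plan produced from N (points) and M (cores). */
struct fft_plan {
    unsigned points_per_core;   /* N / M */
    unsigned local_stages;      /* stages using only core-local data */
    unsigned exchange_stages;   /* stages needing data from another core */
};

static int is_pow2(unsigned v)
{
    return v != 0 && (v & (v - 1)) == 0;
}

static unsigned ilog2(unsigned v)   /* v must be a power of two */
{
    unsigned l = 0;
    while (v > 1) {
        v >>= 1;
        l++;
    }
    return l;
}

/* Returns 0 on success, -1 if n or m does not fit the assumptions. */
int fft_plan_make(unsigned n, unsigned m, struct fft_plan *out)
{
    assert(out != NULL);

    if (!is_pow2(n) || !is_pow2(m) || m > n)
        return -1;

    out->points_per_core = n / m;
    out->local_stages    = ilog2(n / m);
    out->exchange_stages = ilog2(m);
    return 0;
}
```

For example, 1,024 points on 16 cores gives 64 points per core, 6 local stages, and 4 exchange stages. A generator could use such a plan to decide which stages to emit as plain loops and which as inter-core transfers.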

Create a 4,096 core Parallella board

Summary: When it comes to algorithm scaling, “the proof is in the pudding.” Simulation is a good start, but only if the simulator is cycle accurate. Actually proving the performance scaling requires real hardware. Commercial GPUs that support launching thousands of parallel threads are available, but there is no good hardware reference platform for 2D array architectures. The Epiphany chips currently in existence are based on 65nm technology and unfortunately have only 16 cores. Fortunately, the Epiphany devices include chip-to-chip links, allowing the devices to scale up to thousands of cores on a single board. If built, such an open source development platform would be a great tool for researchers interested in testing the scalability of cutting edge parallel algorithms.

Link: https://github.com/parallella/parallella-hw/tree/master/parallella-4k/docs

Language: Schematic entry, PCB design

License: MIT

Mentor: “Andreas Olofsson” andreas@adapteva.com

[An interesting project, but clearly not “code” so probably not well suited for Google Summer of CODE. We will find some other way to support this development]

The Epiphany chips currently in existence are based on 65nm technology. What a pity! 28nm and more advanced technologies may be too expensive for small fabless companies. But now China provides some opportunities for them. HLMC and SMIC are developing their 28nm processes. (http://www.hlmc.cn/en/ http://www.smics.com/eng/index.php)

SMIC’s HKMG process is not mature. HLMC’s PolySiON 28nm process is not mature yet (neither is its HKMG). If you choose to manufacture chips at these fabs, they may offer you a good price.

Of course, the cost is low yield. You can overcome this with some redundancy. For example, make two CPU cores the base hard macro of the mesh array, but actually design three cores into every hard macro. If any two of the three cores work, the mesh can work.

If you are interested, I have a friend working as an agent for SMIC and HLMC. I’d like to introduce him to you. I also know some companies with good and cheap flip-chip solutions.

I didn’t see Parallella in the organization list. Was Parallella selected?

I am very impressed with Parallella, and with Andreas. I would very much like to collaborate. I write parallel software and I believe I can target Epiphany. The problem is that I need to test scalability, and today that means thousands of threads. I believe the best application for computers is simulation. Towards that end, how about a GPU-based 4,096 core simulator? It would not be cycle accurate, but it would simulate cacheless SIMD processors with local memory in an application-specific way. It would allow the architecture of DMA and network traffic with software-only caches to be optimized at scale. Thanks for your insights. I will probably pursue something along these lines anyway, but I would really appreciate your comments. I very much appreciate all you have learned with Parallella.
